Nvidia GPUs at ML Cloud

Physical architecture

Within ML Cloud, we have four types of physical GPU cards, all made by Nvidia:

- RTX 2080 Ti (PCIe) (Full information)

- V100 (Mezzanine) (Full information)

- A100 (PCIe) (Full information)

- H100 (Mezzanine) (Full information)

What's inside a GPU?

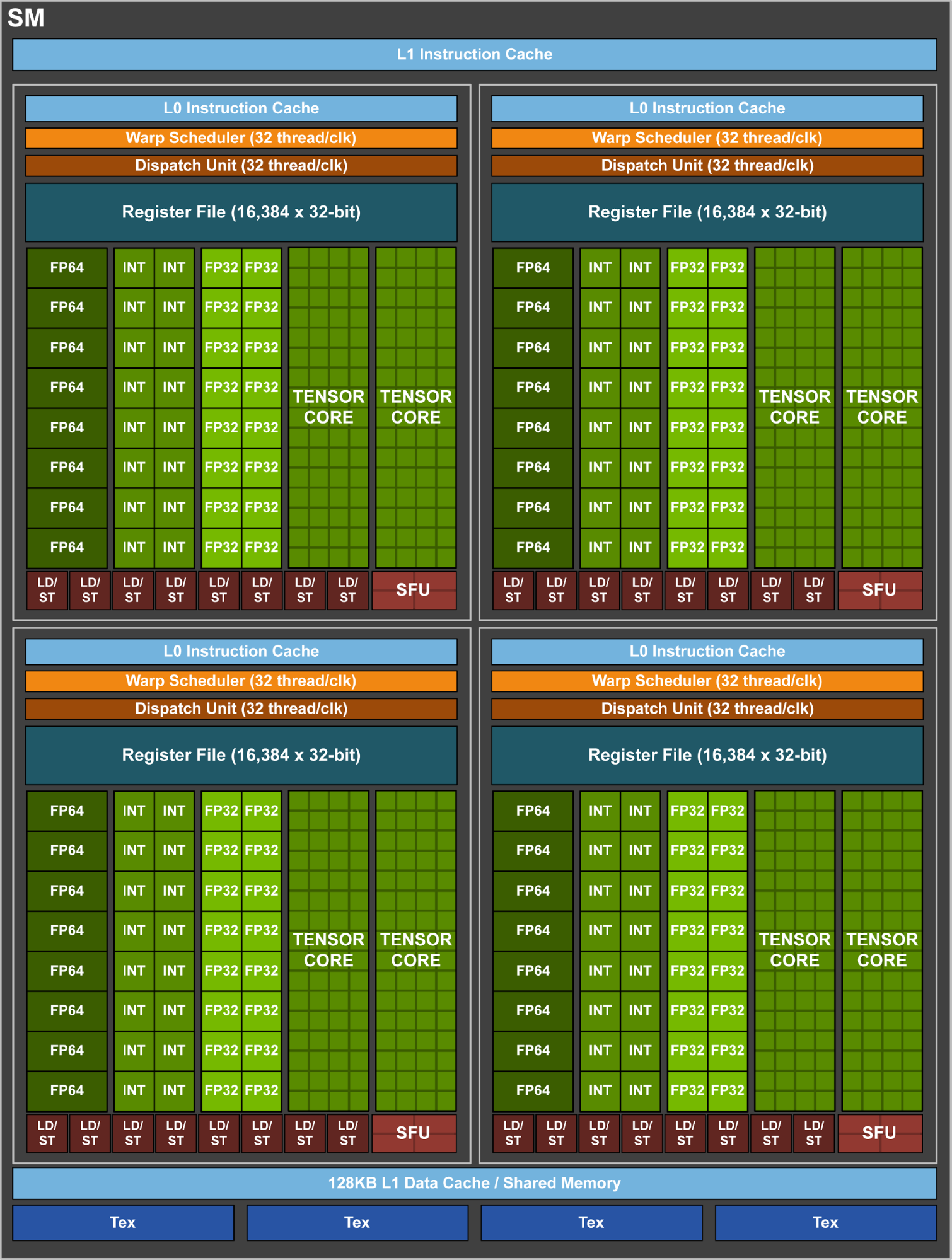

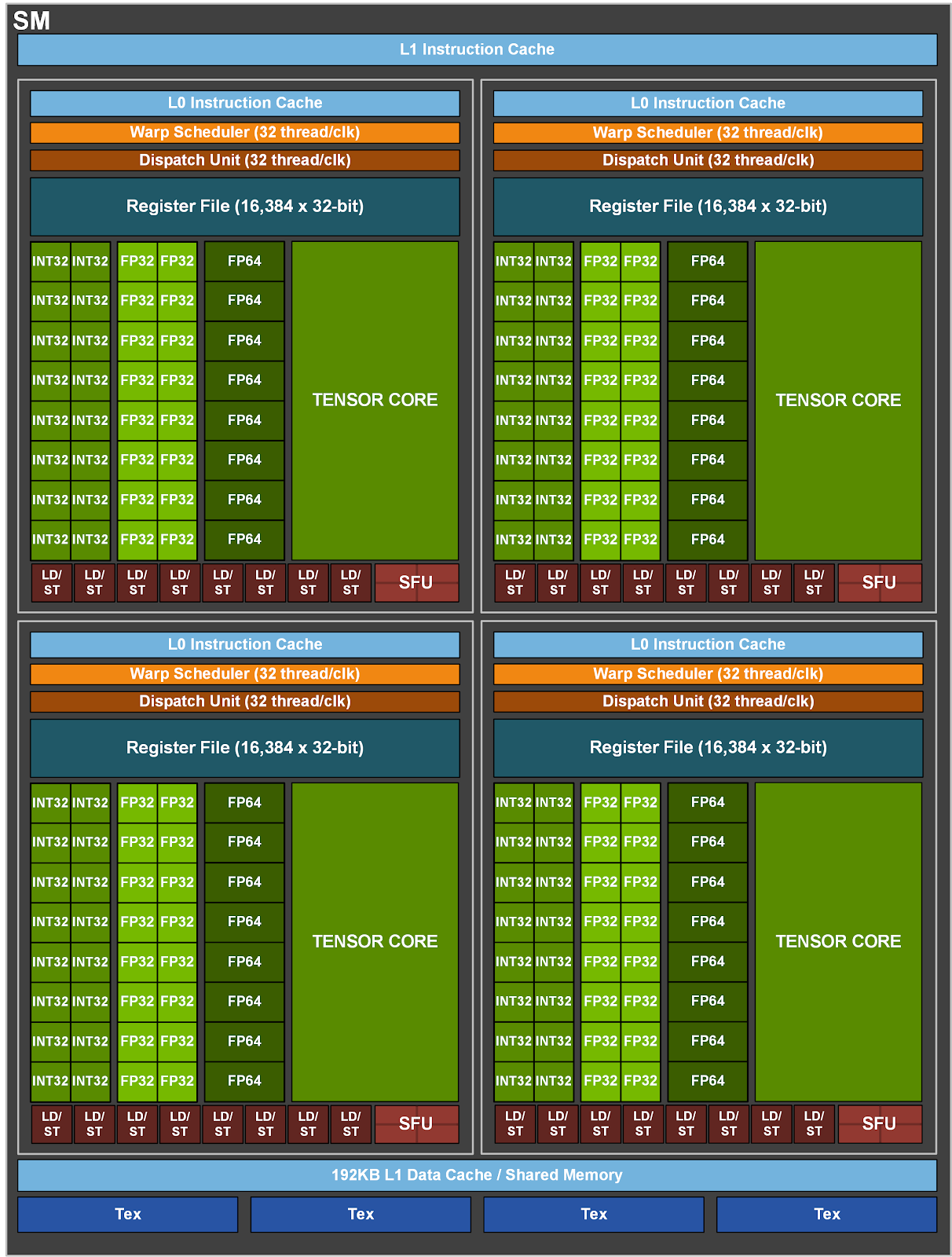

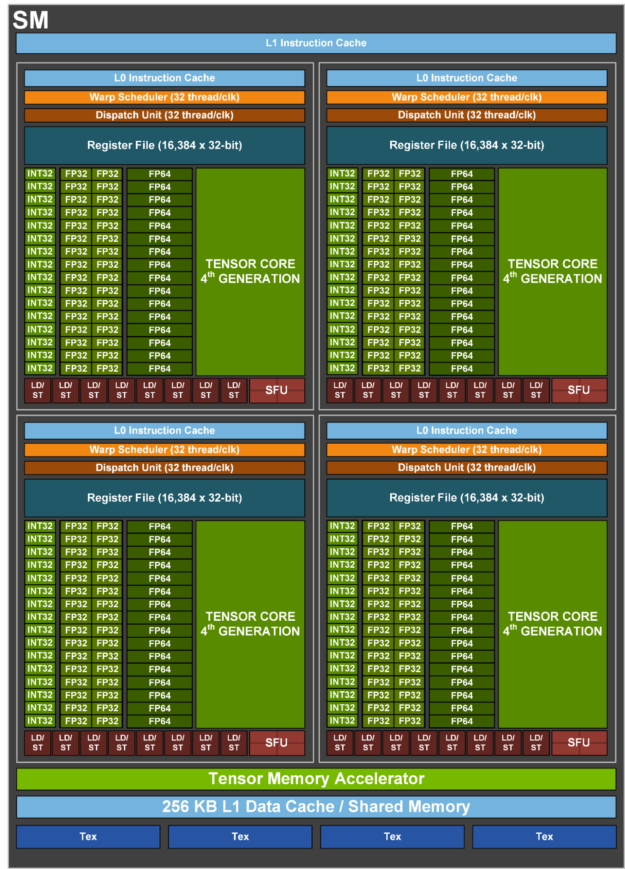

Each GPU card is built around a single GPU chip, which contains many Streaming Multiprocessors (SMs). Each SM contains several components, including shared memory, registers, and schedulers, but for most applications the most relevant components are the compute cores. Our GPUs differ in the number and kind of compute cores in their SMs, and each kind of core is designed to be optimal for a particular datatype:

- INT32 cores are optimal for 32-bit integers.

- FP32 cores are optimal for 32-bit floating-point operations (aka "single precision").

- FP64 cores are optimal for 64-bit floating-point operations (aka "double precision").

- Tensor cores vary in capability between GPU types, but provide very high performance for datatypes often relevant for AI applications, such as FP16, BF16, INT8, and (on the newest cards) FP8.

Why is datatype important?

- Computational numbers are stored in registers of specific datatypes, and GPUs are optimized for specific datatypes.

A datatype defines how a number is stored in computer memory. The number of bits determines how many distinct values can be represented: an 8-bit datatype has 2^8 = 256 possible values, and a 16-bit datatype has 2^16 = 65536 possible values.
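As a minimal sketch of this arithmetic, the following Python snippet computes how many bit patterns an n-bit datatype can hold and, for the integer case, the resulting signed range. The function names are illustrative only and are not part of any ML Cloud tooling.

```python
def distinct_values(bits):
    """Number of distinct bit patterns an n-bit datatype can hold."""
    return 2 ** bits


def signed_range(bits):
    """Smallest and largest value of an n-bit two's-complement integer."""
    return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1


for bits in (8, 16, 32, 64):
    low, high = signed_range(bits)
    print(f"{bits:2d} bits: {distinct_values(bits)} patterns, "
          f"signed integer range {low} to {high}")
```

Floating-point datatypes use the same number of bit patterns, but spread them over a much wider range of values at varying precision.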

For calculation, numbers are held in registers, and a core performs a numerical operation on the contents of those registers. While a CPU core is able to deal with a variety of datatypes for general processing, each kind of GPU core is designed to operate on one specific datatype. Hence, an FP32 core is optimized to perform operations only on 32-bit floating-point numbers.

Therefore, GPUs are most effective at performing operations on the datatypes that match their cores. For example, the RTX 2080 Ti is very slow at processing 64-bit floating-point numbers, because it lacks the dedicated FP64 cores that the V100, A100, and H100 all have.

What is a Tensor Core?

- Nvidia GPUs include "Tensor Cores" designed to handle AI-relevant lower-precision datatypes (such as FP8) at extremely high speeds. More advanced Tensor cores also handle specific operations on other datatypes.

Traditionally, HPC applications have used 32-bit and 64-bit datatypes. Recently, lower-precision datatypes have become increasingly important because of their use in AI algorithms (among others). This is a field of rapid ongoing improvement, so GPUs vary not just in performance, but also in which datatypes they support. More advanced Tensor cores offer higher performance and support more datatypes.
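To illustrate what "lower precision" means in practice, the sketch below stores the same value in 64-, 32-, and 16-bit IEEE 754 formats using Python's standard `struct` module. It runs on the CPU and only demonstrates rounding behaviour; it says nothing about GPU performance. The helper name `round_trip` is illustrative.

```python
import struct


def round_trip(value, fmt):
    """Store value in the given IEEE 754 format and read it back as a Python float."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


# struct format codes: "d" = 64-bit, "f" = 32-bit, "e" = 16-bit floating point
FORMATS = {"FP64": "<d", "FP32": "<f", "FP16": "<e"}

value = 0.1
stored = {name: round_trip(value, fmt) for name, fmt in FORMATS.items()}

for name, result in stored.items():
    print(f"{name}: {result!r} (rounding error {abs(result - value):.3e})")

# FP16 has only 11 significant bits, so not every integer above 2048 is representable.
print("2049 stored as FP16:", round_trip(2049.0, "<e"))
```

The fewer bits a datatype has, the larger the rounding error. Many AI workloads tolerate this error, which is why lower-precision formats are attractive there.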

As Tensor cores have become more advanced, they have also increasingly gained specialized functionality for specific mathematical operations on different datatypes.

The exact benchmarks of the Tensor Cores within the ML Cloud's GPUs are currently being determined, and this document will be updated when they are known.

- Generally, the RTX 2080 Ti has basic Tensor cores, the V100 has intermediate Tensor cores, the A100 has advanced Tensor cores, and the H100 has cutting-edge Tensor cores. These vary in both performance and datatype support.

Not all cores are equal

- Due to performance increases from, among other things, decreasing lithographic feature sizes, newer GPUs are able to perform more calculations using the same amount of power. A smaller transistor generally needs less energy to switch, so more advanced chips can perform better even if they have the same number of cores or the same power limit.

Therefore, newer GPUs will often be faster than older ones.

Comparison of physical architecture

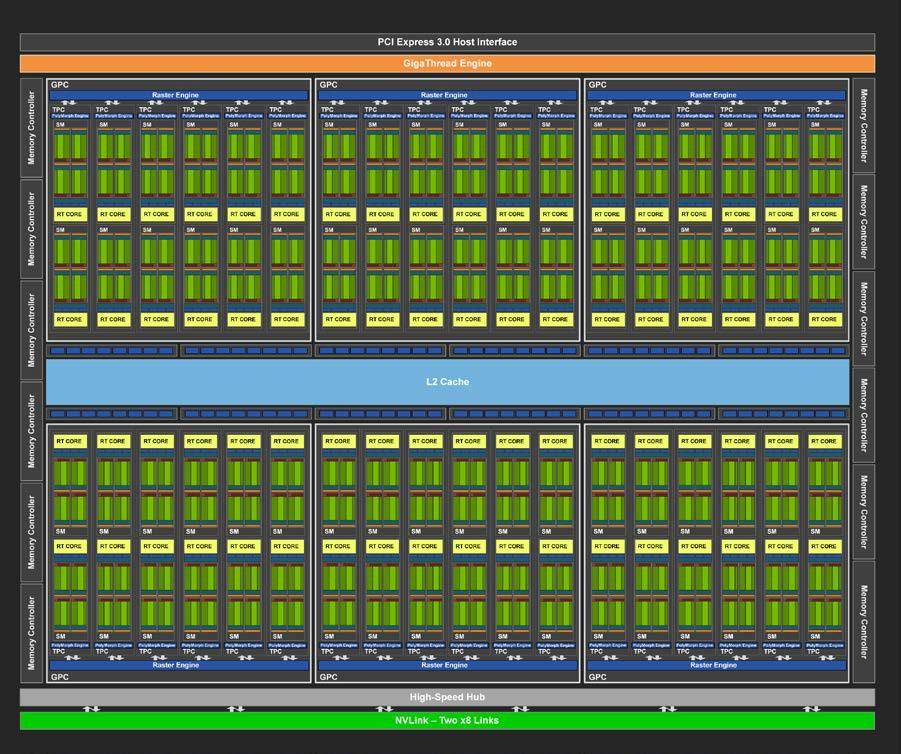

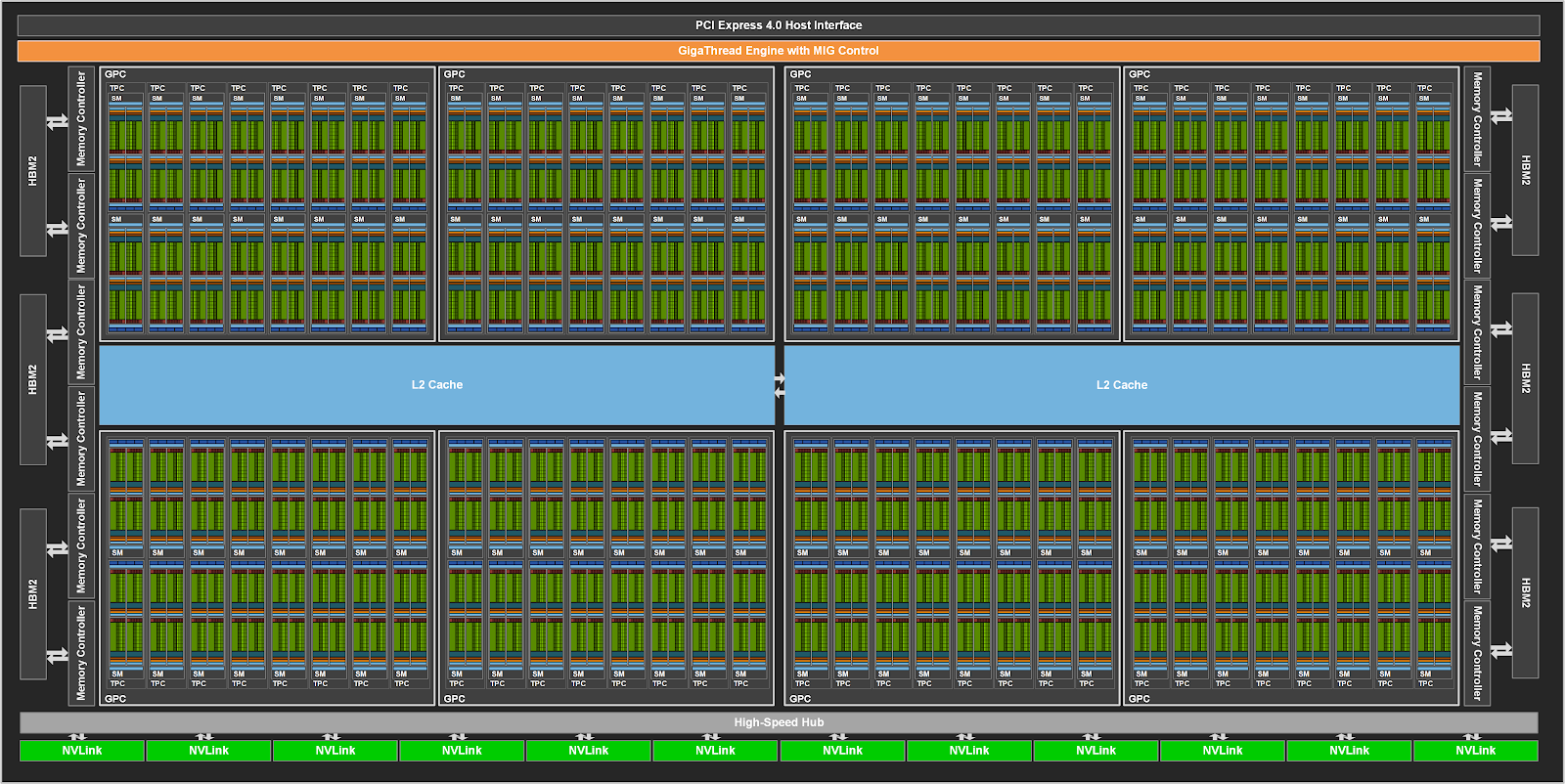

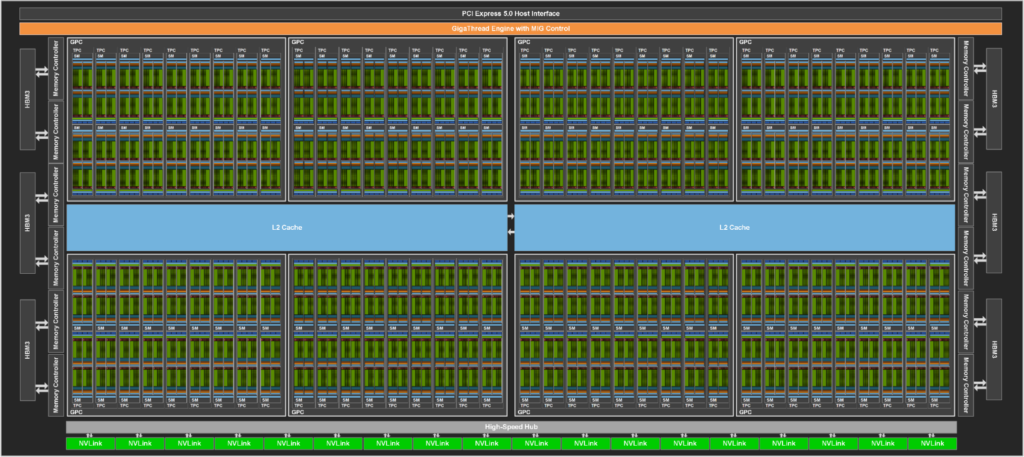

These diagrams from Nvidia show the differences between each GPU's full chip and SM, and illustrate the basic differences in complexity between the GPU cards.

Important: Not all cores are created equal. We are still determining the exact benchmarks but, in general, the RTX 2080 Ti cores will be slower than the V100 cores, which will be slower than the A100 cores, which in turn will be slower than the H100 cores.

RTX 2080 Ti

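The RTX 2080 Ti GPU has 68 SMs. Within each SM are 64 FP32, 64 INT32, and 8 basic Tensor cores. It has no dedicated FP64 cores comparable to the other cards; its Turing architecture includes only 2 FP64 units per SM, for compatibility.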

V100

The V100 GPU has 80 enabled SMs (the full GV100 chip has 84). Within each SM are 64 FP32, 64 INT32, 32 FP64, and 8 intermediate Tensor cores.

A100

The A100 GPU has 108 SMs. Within each SM are 64 FP32, 64 INT32, 32 FP64, and 4 advanced Tensor cores.

H100

The H100 GPU in its mezzanine (SXM) form has 132 enabled SMs (the full GH100 chip has 144). Within each SM are 128 FP32, 64 INT32, 64 FP64, and 4 cutting-edge Tensor cores.
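The per-SM figures in this section can be turned into whole-card totals by multiplying by the number of SMs. The sketch below does this for two of the cards, using the SM and per-SM counts given above; the function name `total_cores` is illustrative. Since cores differ between GPU generations, these totals are not a measure of relative performance.

```python
def total_cores(sm_count, cores_per_sm):
    """Total cores of one kind on a card: number of SMs times cores per SM."""
    return sm_count * cores_per_sm


# SM counts and per-SM core counts as listed in this section.
A100_SMS = 108
RTX_2080_TI_SMS = 68

print("A100 FP32 cores:          ", total_cores(A100_SMS, 64))
print("A100 FP64 cores:          ", total_cores(A100_SMS, 32))
print("A100 Tensor cores:        ", total_cores(A100_SMS, 4))
print("RTX 2080 Ti FP32 cores:   ", total_cores(RTX_2080_TI_SMS, 64))
print("RTX 2080 Ti Tensor cores: ", total_cores(RTX_2080_TI_SMS, 8))
```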